The American West

Contributors

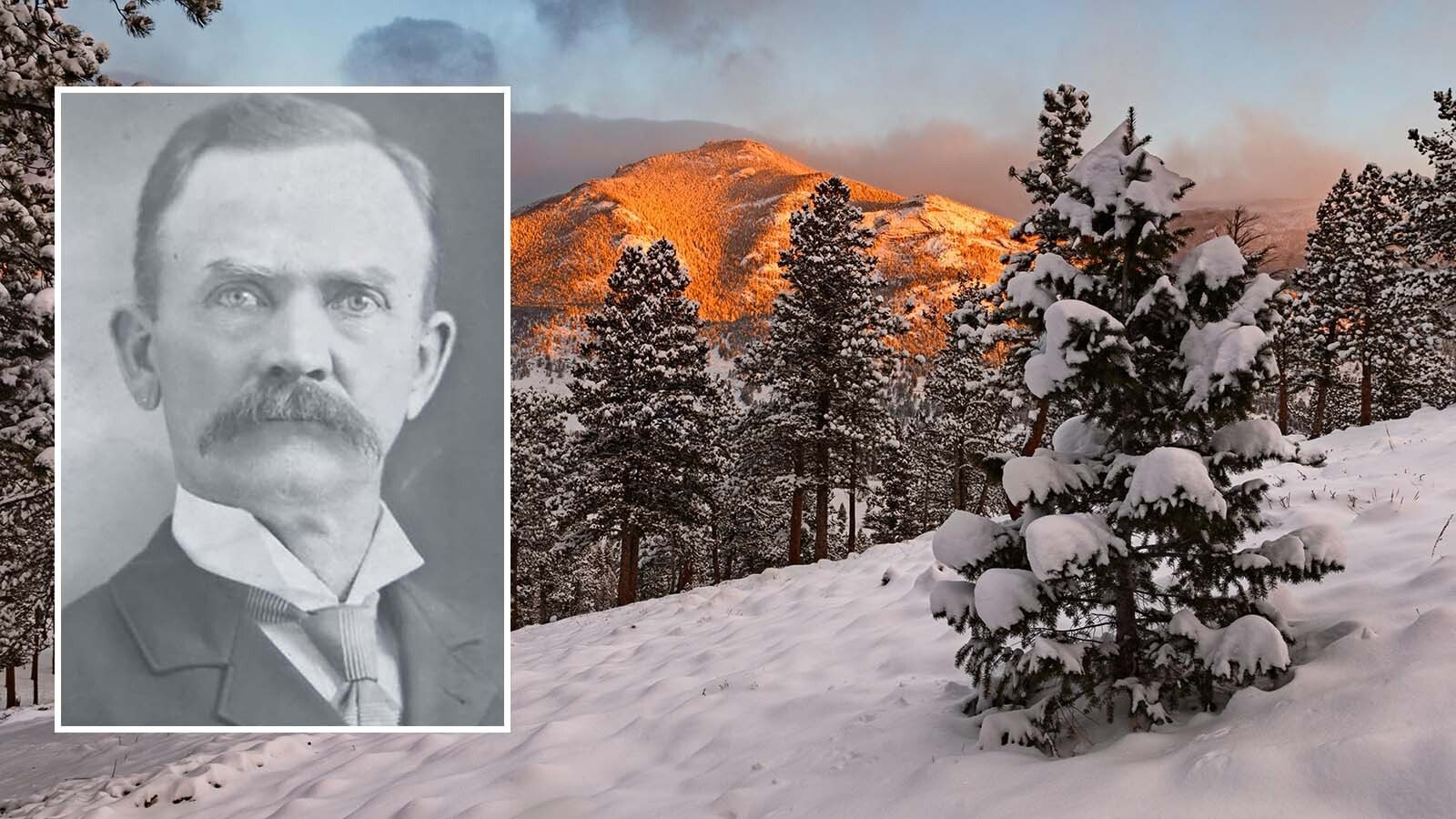

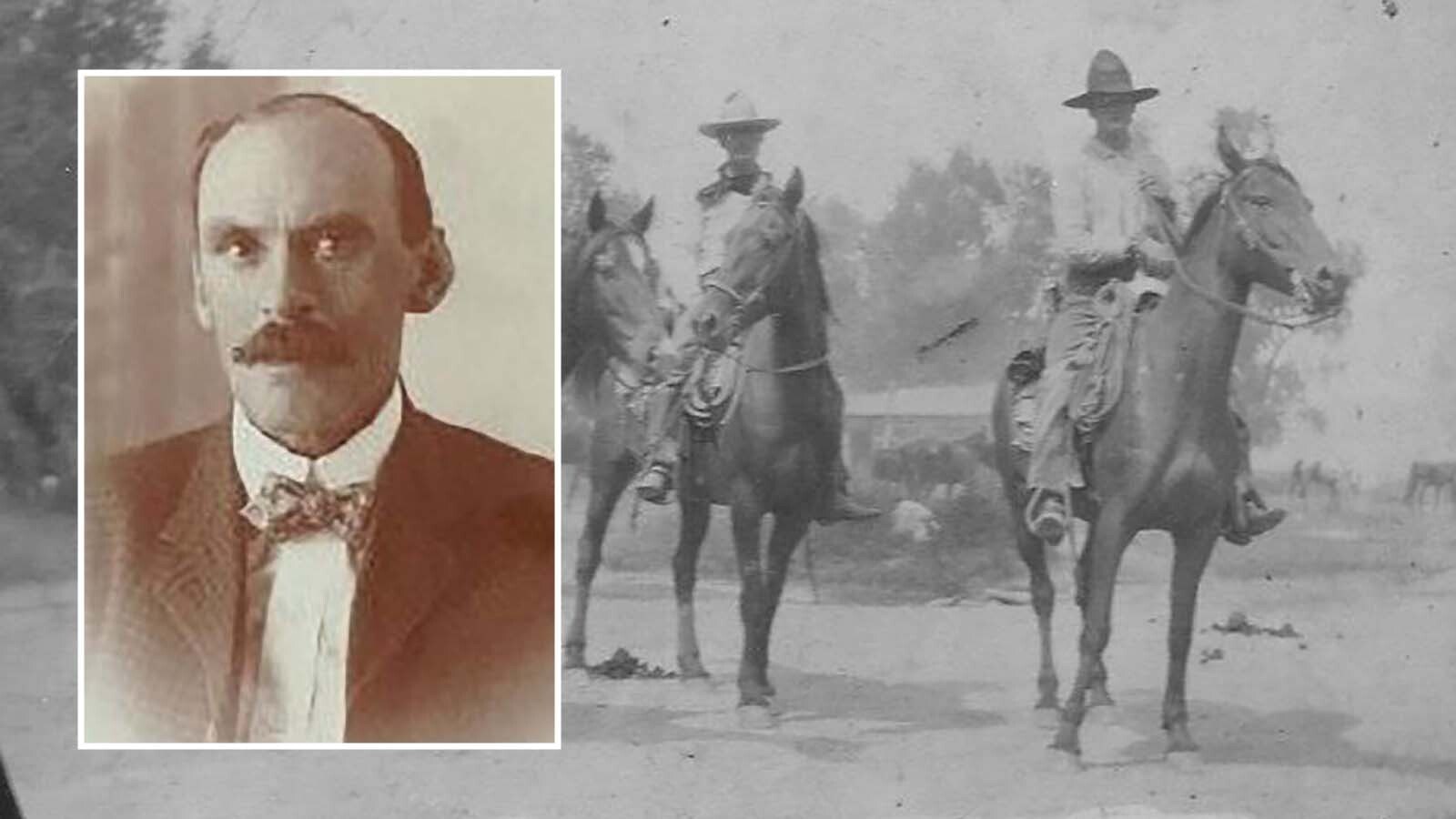

O.P. Hanna: Trapper, Indian Fighter Who Claimed First Homestead In Sheridan County

O.P. Hanna was a frontiersman, American Indian fighter and the first to claim a homestead in Sheridan County. He was prompted to write down his adventures after meeting up with a Sioux warrior who was once his enemy.

Jackie Dorothy, December 01, 2025

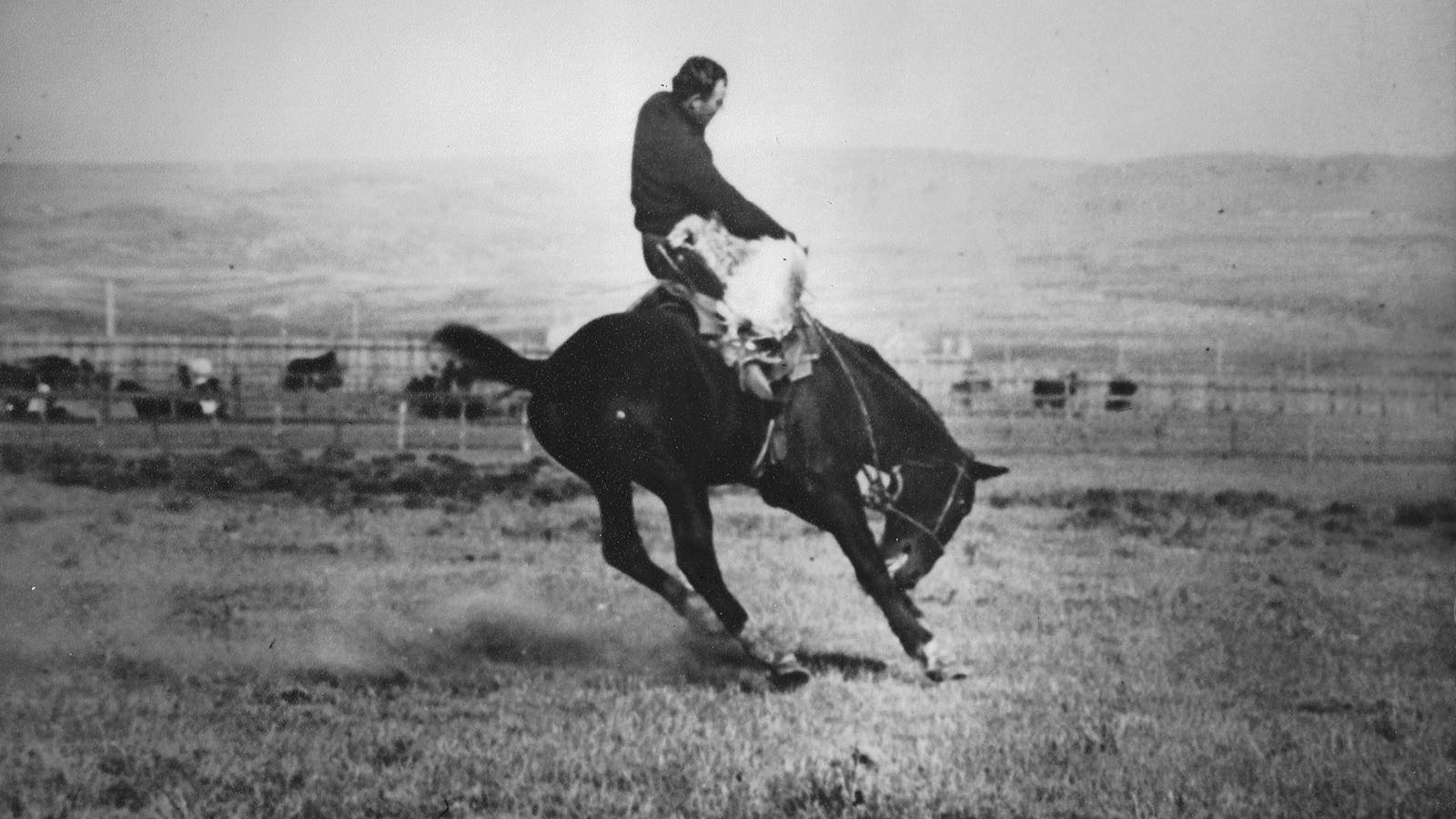

Who Is The Rider on Steamboat, Wyoming's Famed Bucking Horse?

Wyoming’s most enduring symbol is our license plate featuring the bucking horse named Steamboat. But who is the rider? In Pinedale, old timers may tell you it’s Guy Holt. In Lander, sentiment leans toward Stub Farlow. In Laramie, it's Jake Maring.

Candy Moulton, November 28, 2025

Wyoming History: The British Adventurer Who Lost A Bet And Became A Cattle Baron

After losing a bet on a horse race, Moreton Frewen — who would become Winston Churchill’s uncle — declared he was moving to Wyoming to get into the cattle business. Along with his brother and a band of outlaws, Frewen became an unlikely cattle baron.

Jackie Dorothy, November 16, 2025

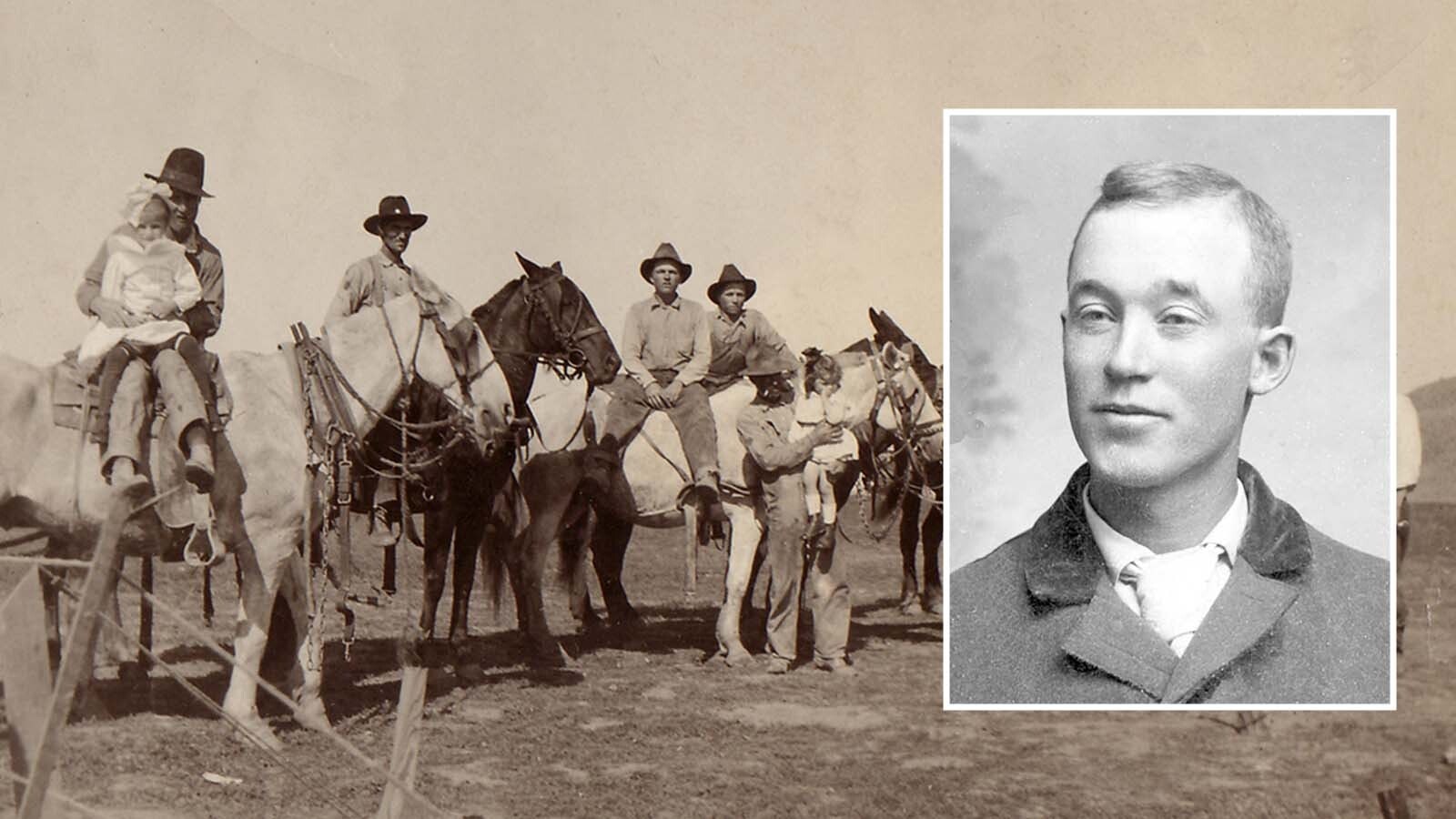

Wyoming Cowboy Hall of Fame: A. D. Kruse -- Life in the Saddle

Because ranch work is a year-round occupation, Wyoming Cowboy Hall of Fame 2025 inductee A. D. Kruse was in the saddle throughout the year. The Niobrara County rancher believed that if it was too cold to do anything else, it was a good day to ride.

Candy Moulton, November 16, 2025

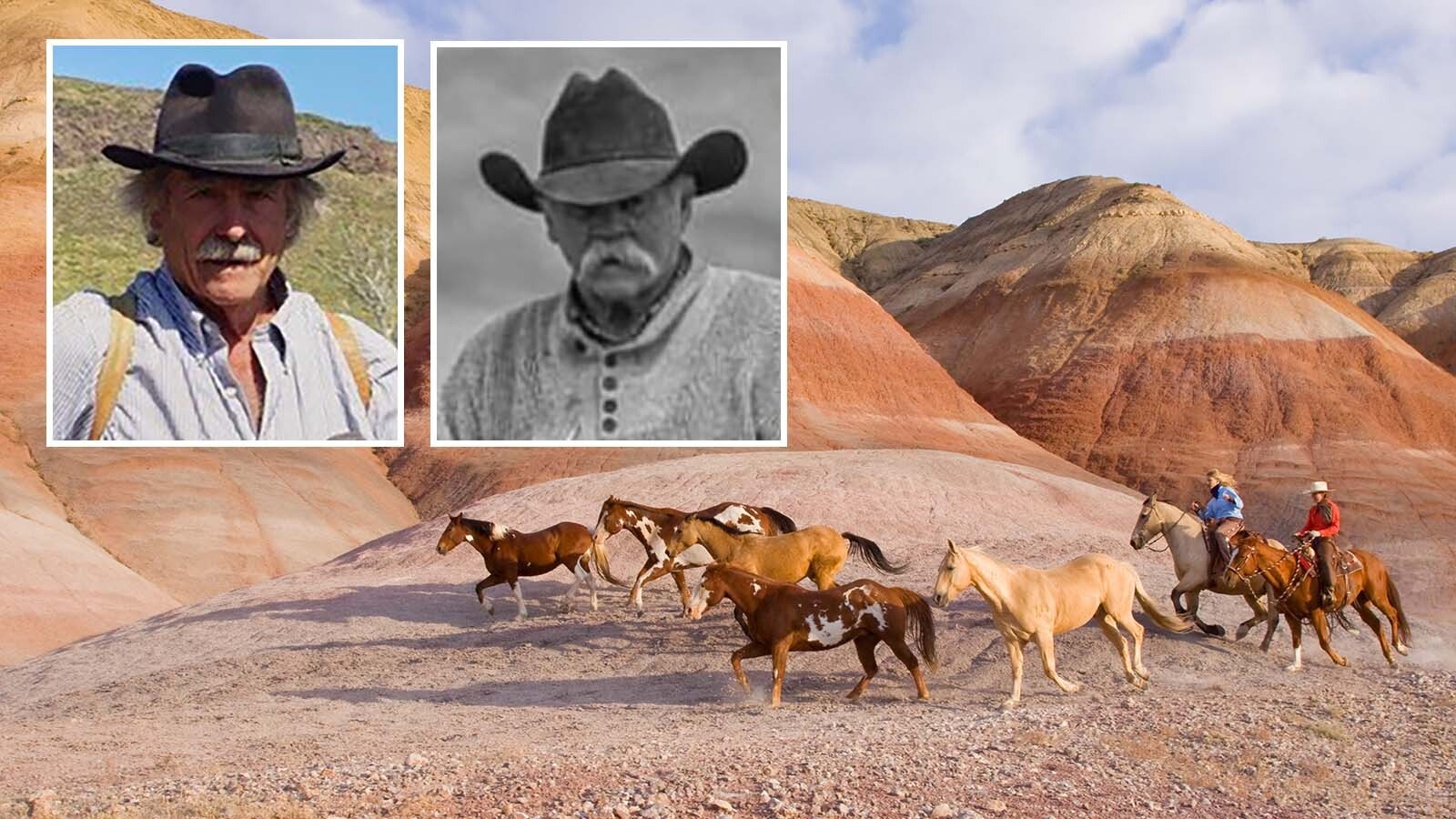

450-Mile Solo Horse Drive In 1984 Was One Of Wyoming's Last Over Outlaw Trail

Larry Bentley was hired to trail 30 quarter horses alone for 450 miles across Wyoming and into Colorado. It was 1984, and Bentley took the horses over the Outlaw Trail, where the Hole in the Wall gang had trailed their stolen horses 80 years before.

Jackie Dorothy, November 15, 2025

Wyoming History: Hudson’s Wild Legacy Of Brothels And Bootleggers

From the early 1900s until the 1960s, Hudson, Wyoming, was one of the liveliest towns in Fremont County, living up to a legacy of brothels and bootleggers. Even today, the town's website boasts that it had the most brothels in the region.

Jackie Dorothy, November 09, 2025

Wyoming History: Big Horn Loved A Pair Of Tame Elk, Until A Teacher Got Stomped

Back in the 1890s, a pair of tame elk delighted the townspeople of Big Horn, Wyoming. They even taught the elk how to pull wagons for them. Then one of them stomped the local schoolteacher and the love affair ended.

Jackie Dorothy, October 05, 2025

Wyoming History: That Time Old Jim Gregory Literally Talked A Man To Death

Fort Fred Steele in Carbon County was the setting for a legendary Old West Wyoming showdown. It was 1878 when Old Jim Gregory literally talked another man to death. When a doctor arrived, he looked at the body and said, “This is some of Gregory’s work.”

Jackie Dorothy, September 14, 2025

Wyoming History: Nobody Crossed ‘Chatty’ Chatfield, The Toughest Cowboy Pioneer

Against a backdrop of lawlessness and frontier legend, Elmer “Chatty” Chatfield may have been the toughest of them all. Even at birth he wasn’t fazed when a windstorm sent a tent pole onto his head.

Jackie Dorothy, September 07, 2025

Wyoming History: How Two Oil Refineries Fueled U.S. WWII Aviation Dominance

Powering U.S. combat aircraft to success in World War II required 100-octane fuel, and Wyoming was a key supplier of it. “Get ready Hitler, here comes more hell from Wyoming!” a Frontier Refining Company ad proclaimed on July 27, 1943.

Dale Killingbeck, September 01, 2025

Wyoming History: Sheridan Undersheriff Killed By Friendly Fire In 1914 Shootout With Outlaw

In 1914, a popular Sheridan undersheriff was killed in a three-way shootout with a notorious outlaw fresh out of prison and bent on revenge. An autopsy, however, showed he was killed by friendly fire.

Dale Killingbeck, August 30, 2025

Wyoming History: That Time Bandits Robbed ‘Humpy The Boar-Ape’

The lanky Wyoming cowboy known as Irish Tom by some, and Humpy the Boar Ape by others, was robbed of a payroll stash in the late 1800s. But his recollection of the holdup didn’t quite match the actual robbery.

Jackie Dorothy, August 24, 2025

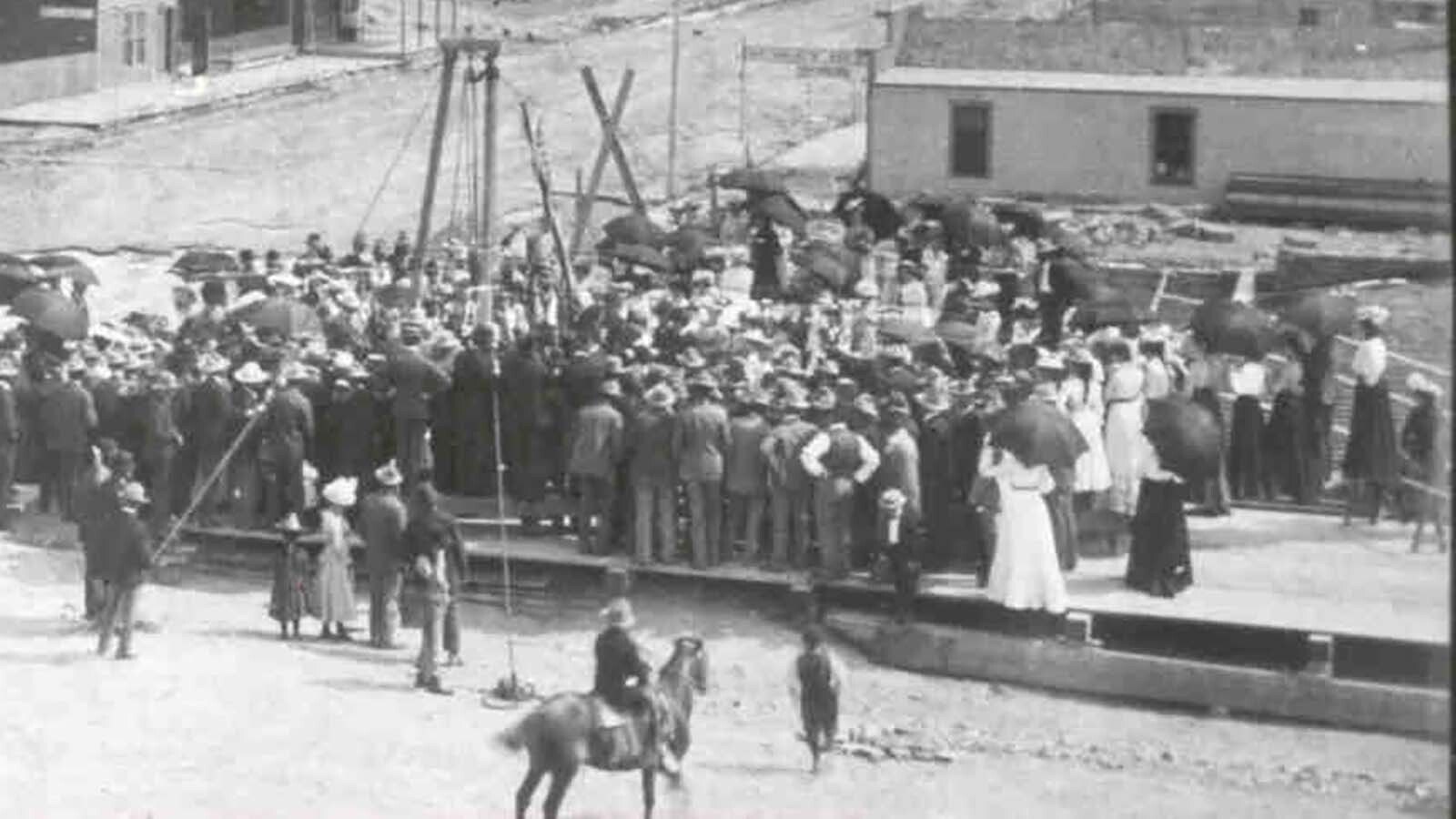

Wyoming History: That Time When 400 Coal Miners Went Vigilante To Hang A Man

In 1899, a Freemason murdered a coal miner in Glenrock, Wyoming. That fueled a mob of 400 miners who went vigilante, marched down the streets of Douglas to break the Freemason out of jail and then hanged him.

Jackie Dorothy, August 23, 2025

Wyoming History: The Miners' Cure For Laziness At South Pass City

When a miner in 1868 tried to avoid working a claim in South Pass, his partners came up with a cure for his laziness. Getting him back to work involved a loaded rifle and the threat of Indian raids.

Jackie Dorothy, August 17, 2025

Wyoming History: The Nearly Forgotten 1892 Horse Wars To Exterminate Thieves

A brutal campaign against horse thieves unfolded across Montana, Idaho and Wyoming in 1892, nabbing notorious outlaws like Butch Cassidy and Jack Bliss. The Horse Wars were nearly forgotten to history until an English historian recently uncovered them.

Jackie Dorothy, August 10, 2025

Wyoming History: The Butting Dane’s Drunken, Murderous Badwater Creek Rampage

Fueled by chokecherry wine and a reputation for headbutting his way into trouble, a prospector with the nickname the "Butting Dane" went on a drunken, murderous rampage along Badwater Creek in 1932 that became infamous across Wyoming.

Jackie Dorothy, August 09, 2025

Wyoming History: Teddy Roosevelt Wanted Big Game In Yellowstone, But Settled For A Vole

During his famous 1903 two-week stay in Yellowstone National Park, President Teddy Roosevelt itched to hunt up some big game. Instead, he settled for scooping up a vole with his hat.

Dale Killingbeck, August 03, 2025

Wyoming History: That Time Skunks Got Mad In The Tiny 2-Cell Jail In ‘Bloody’ Clearmont

Now part of the town playground, the tiny two-cell Clearmont jail has a bizarre history in a town once described as being “bloody.” Built to hold drunks and booze runners, its most infamous story comes from the time skunks got in — then got mad.

Dale Killingbeck, August 02, 2025

Wyoming History: 250 Years Ago, Pivotal Battle Was Fought On Roundtop Mountain

Roundtop Mountain near Thermopolis, Wyoming, is now a popular hiking destination, but it’s also where the Shoshone are said to have gotten their first horses. The horses came from a pivotal battle fought there against settlers about 250 years ago.

Jackie Dorothy, July 27, 2025

Wyoming History: Cowboys Were Tough, Determined And Could Gossip Up A Storm

Surviving in the remote settlements of the Wyoming Territory in the late 1880s took grit, determination and getting into everyone’s business. Cowboys were tough, and also could gossip up a storm to combat loneliness and hard living.

Jackie Dorothy, July 26, 2025

Wyoming History: That Time Buddies Butch Cassidy And Jacob Snyder Were Framed

Jacob Snyder was a close friend of Butch Cassidy and, like Cassidy, claimed he was not an outlaw, but had been framed as a thief by a Wyoming ranch foreman with a grudge. History says maybe, maybe not.

Jackie Dorothy, July 20, 2025

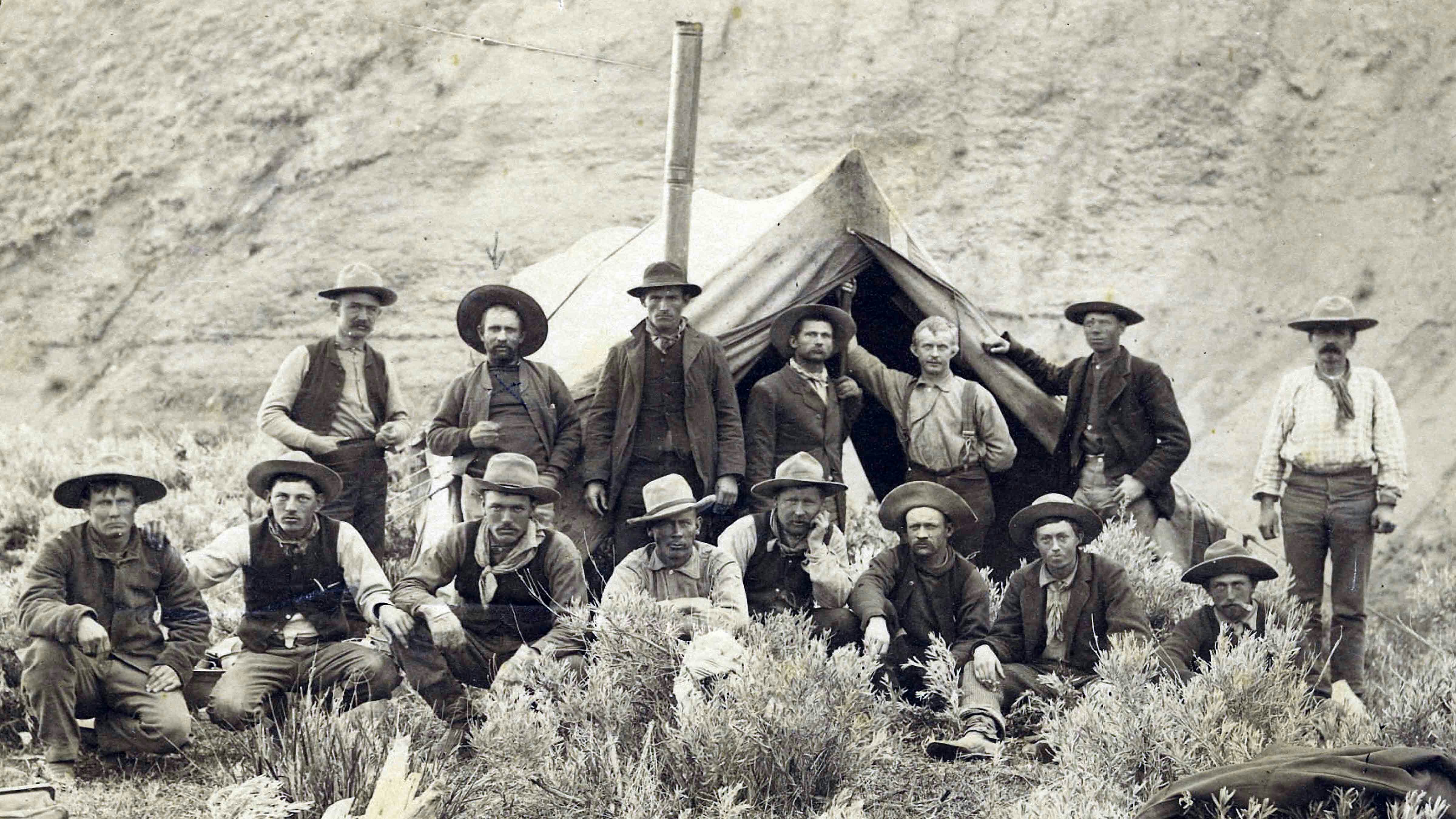

Wyoming History: New Photos Of Legendary Mountain Man Jim Baker Discovered

In what Western historians call an “extremely exciting” discovery, a trunk containing new photographs of the famous mountain man Jim Baker has been found. It also holds letters written to a lawman by Wild Bunch informants.

Renée Jean, July 20, 2025

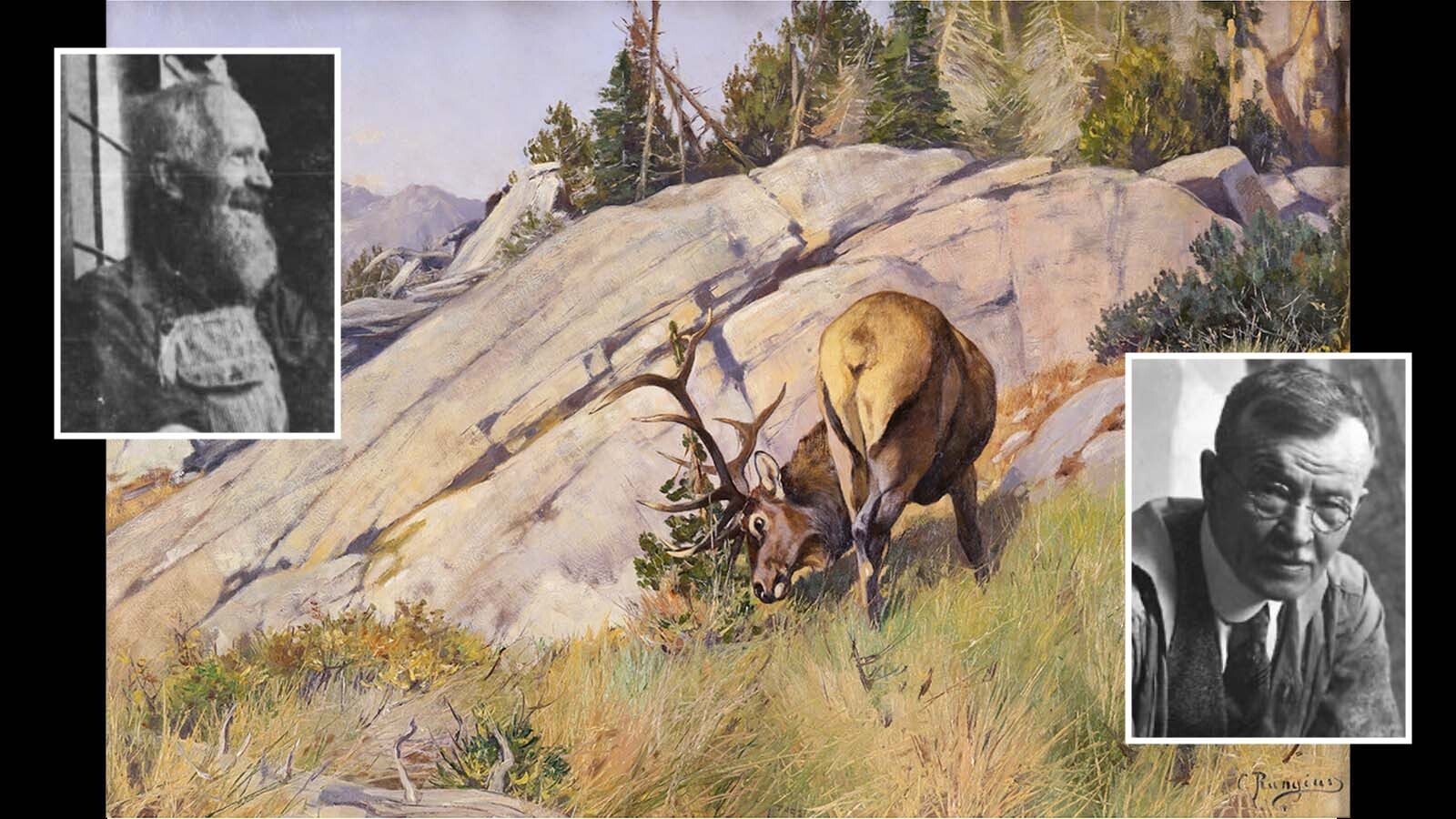

Wyoming History: Legendary Artist Gave Wyoming's First Game Warden Painting For Setting Broken Leg

Pioneering Wyoming game warden Albert Nelson once set the broken leg of legendary wildlife artist Carl Rungius. The thankful artist gave Nelson a rare original painting that was later saved from the devastating 1927 Kelly Flood.

Dale Killingbeck, July 13, 2025

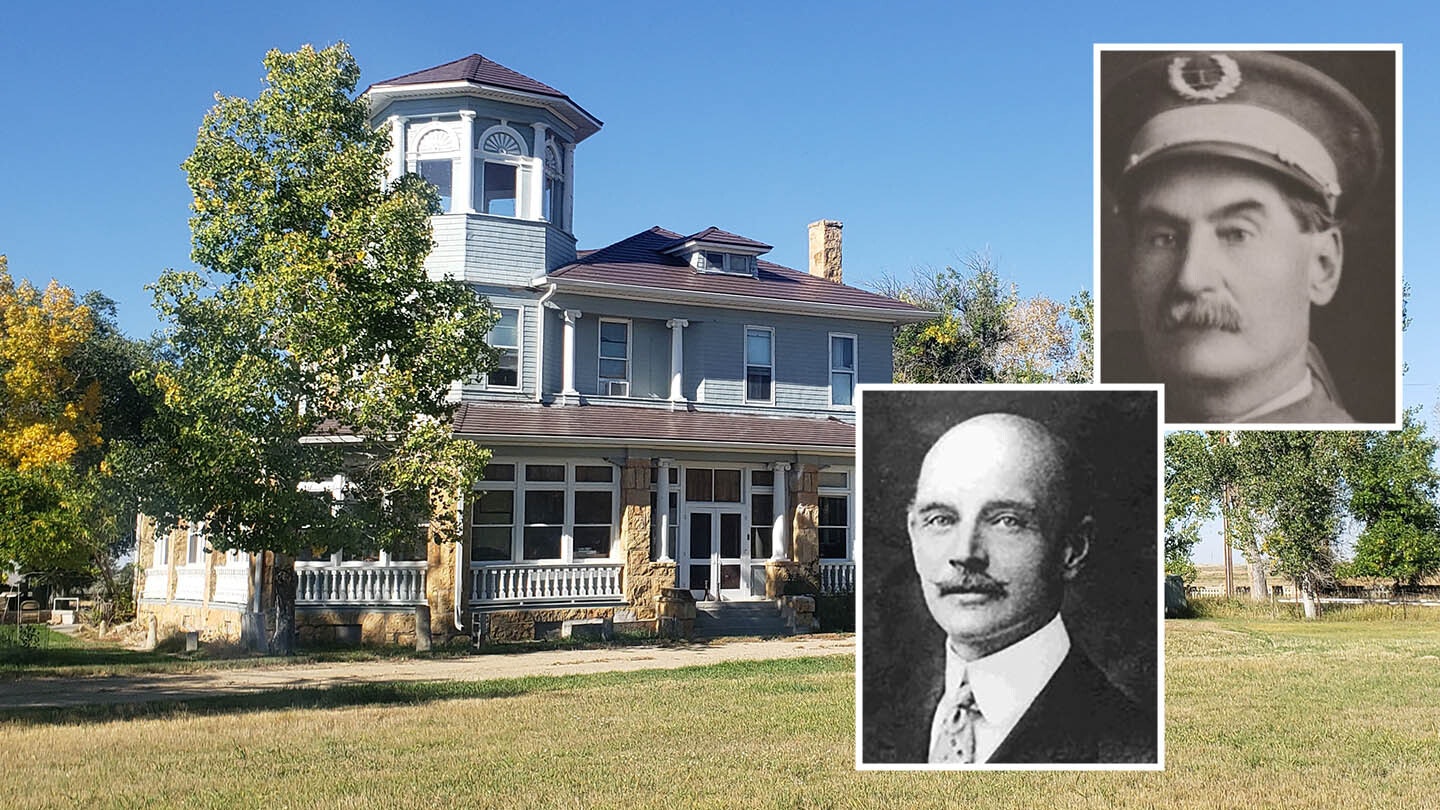

Wyoming History: Otto Chenoweth, The Gentleman Outlaw Of Lost Cabin

Sheep baron J.B. Okie was throwing a high-society party at his opulent mansion in Lost Cabin, Wyoming, when it was crashed by the sheriff and his prisoner. That prisoner was Otto Chenoweth, an outlaw horse thief who escaped and charmed the ladies as a dashing gentleman.

Jackie Dorothy, July 06, 2025